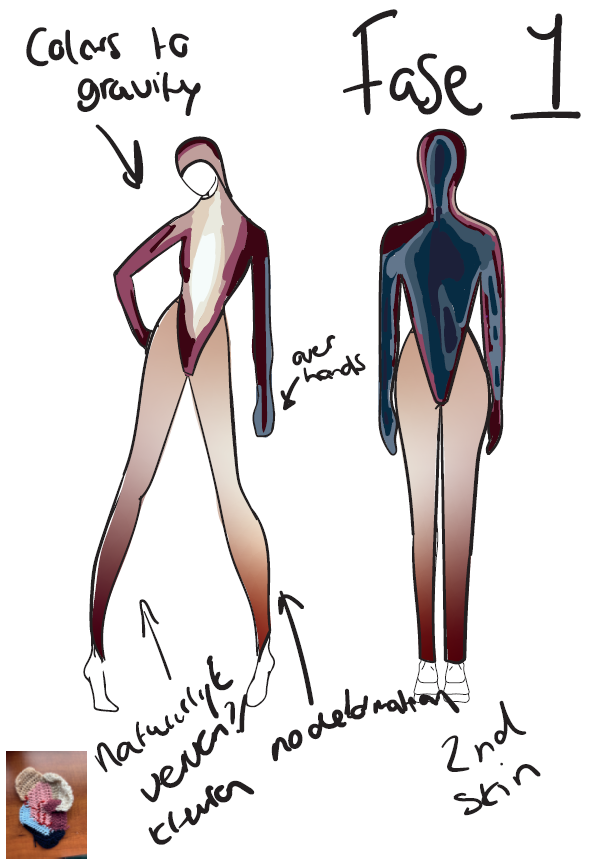

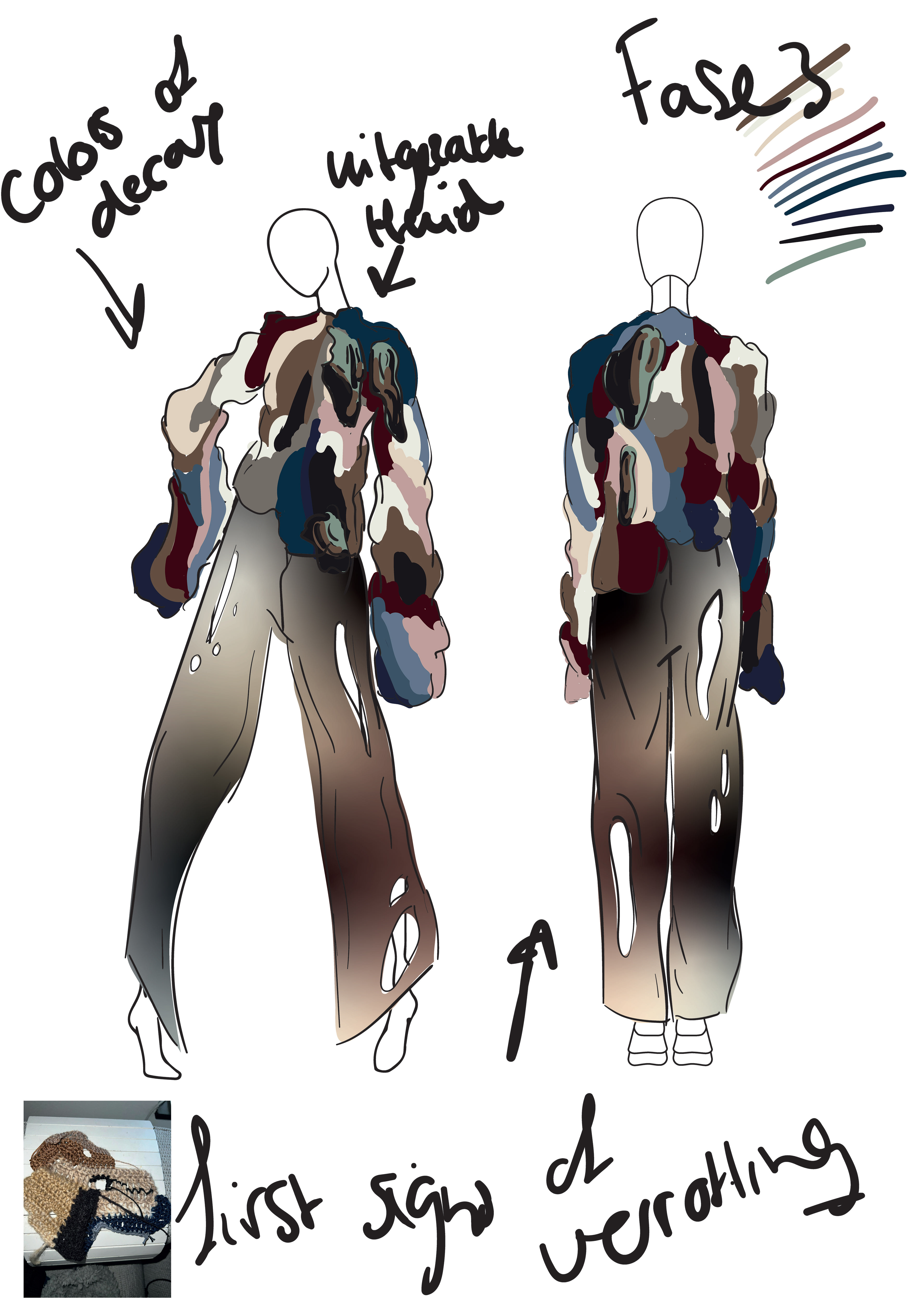

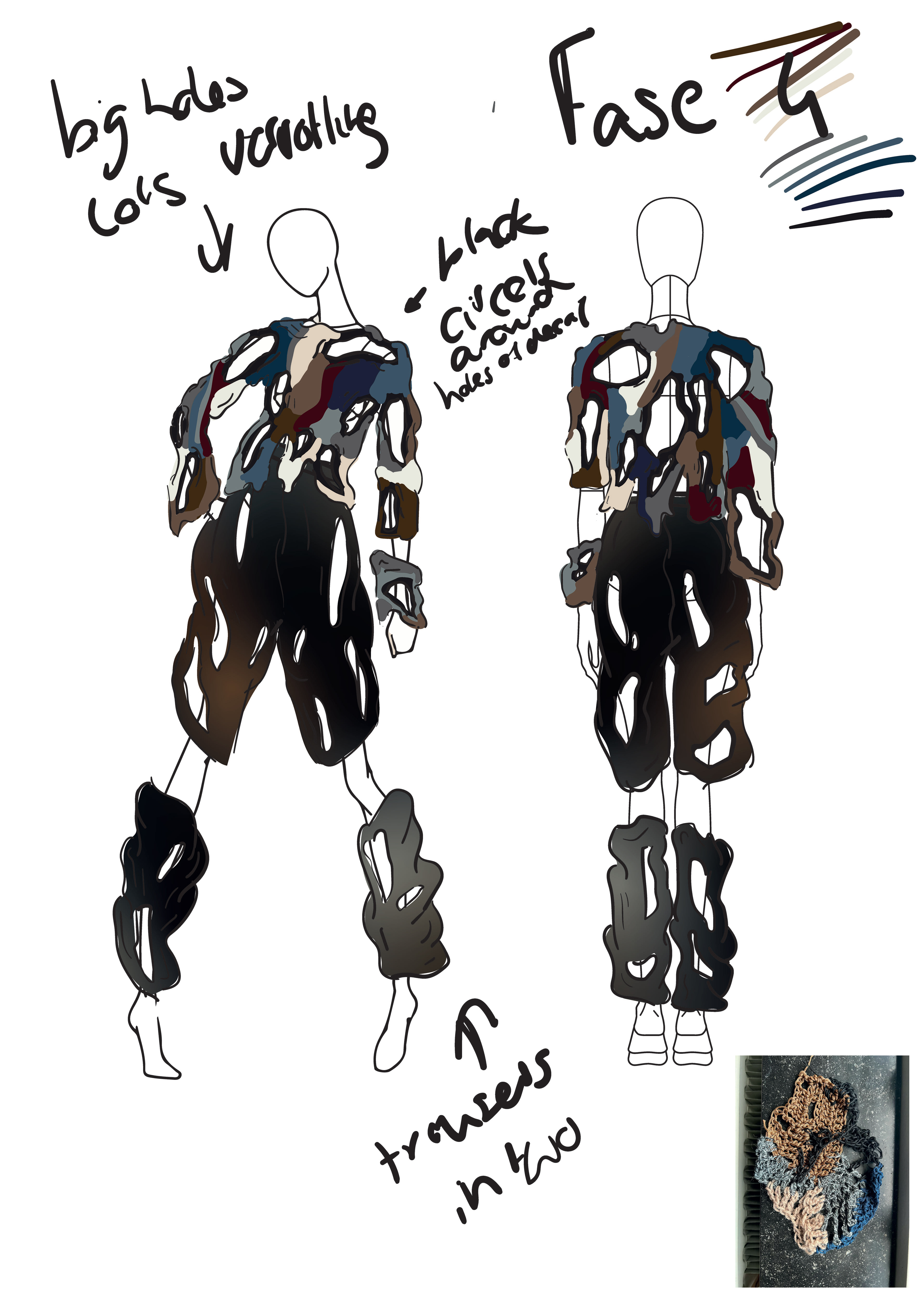

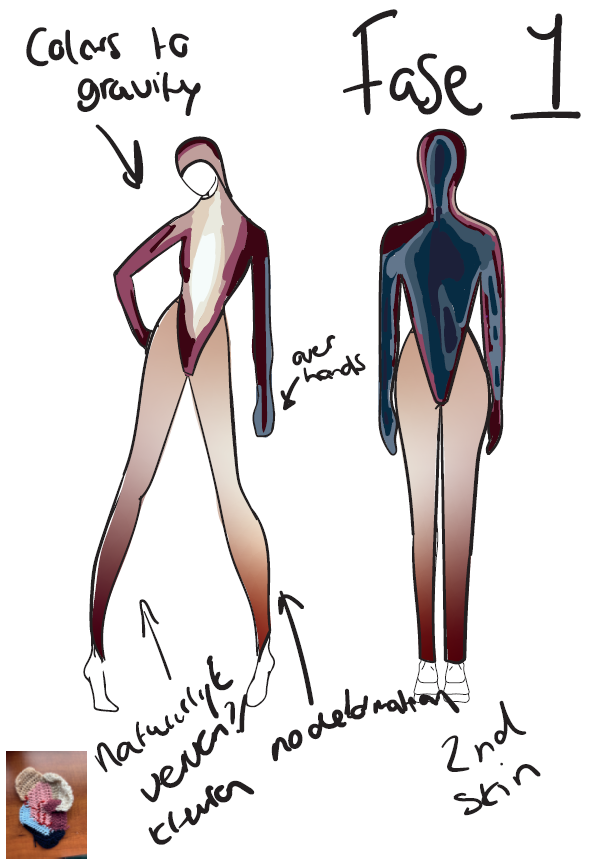

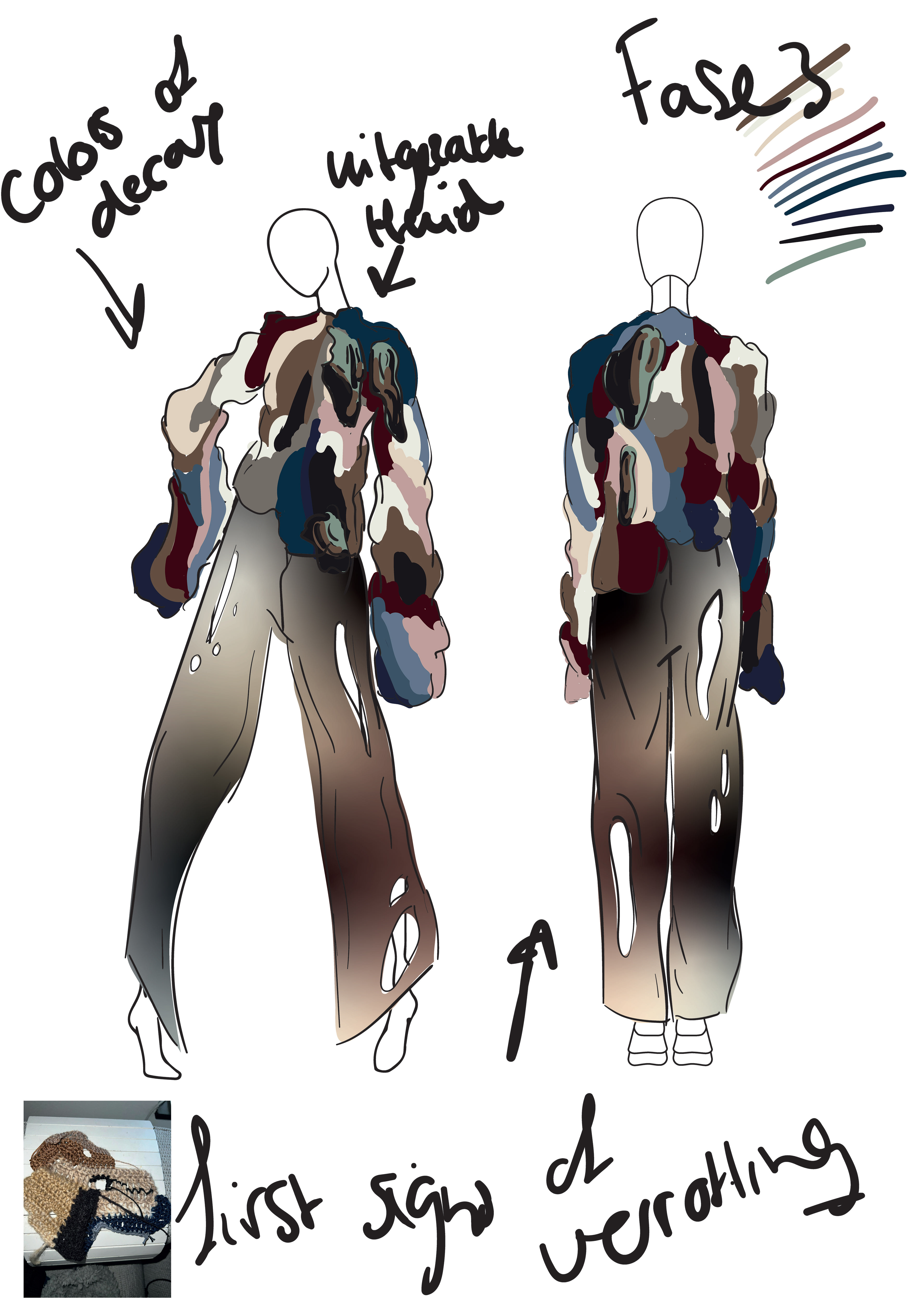

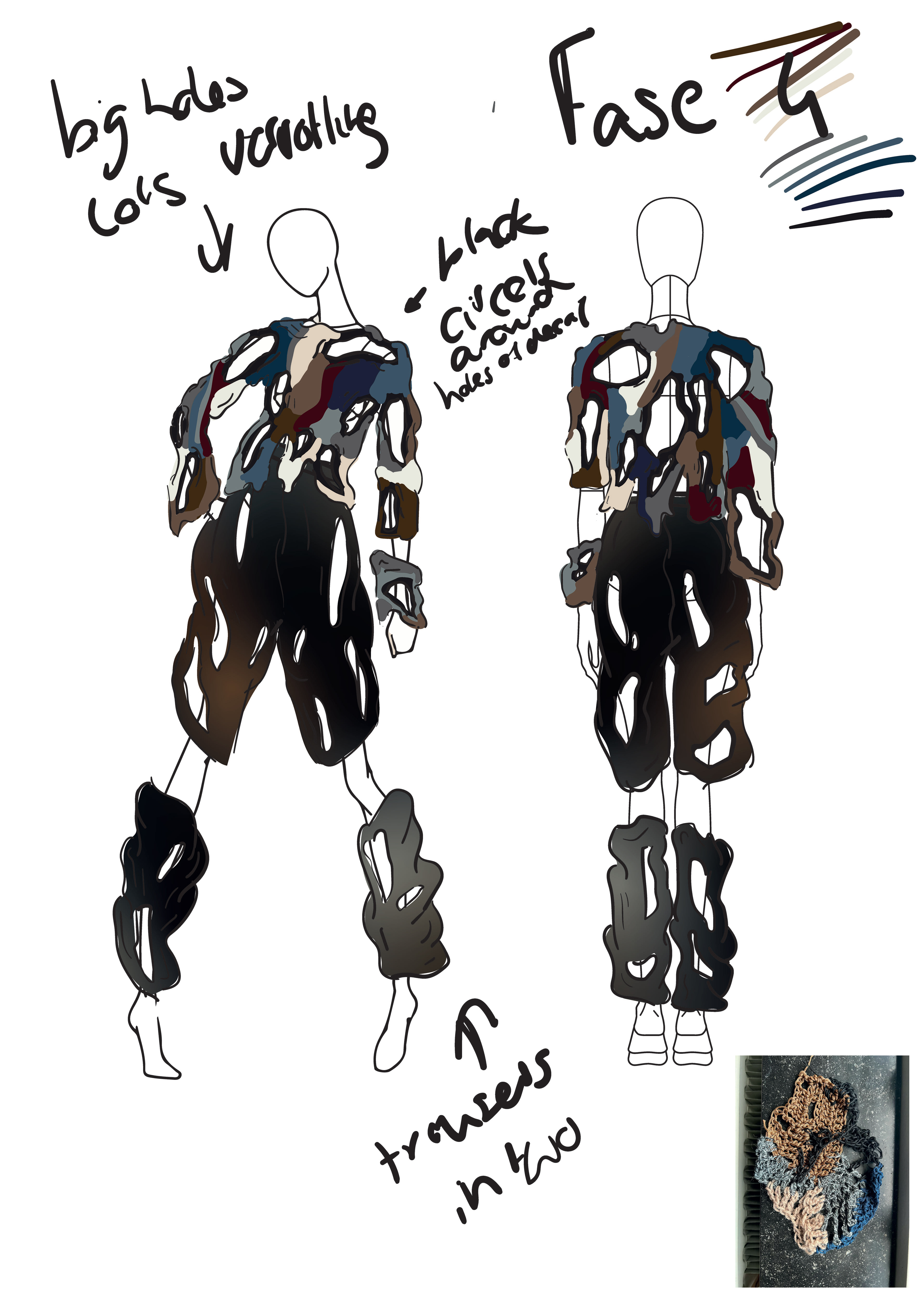

The way it’s supposed to happen… We passed that point a long time ago. We find ways to manipulate nature to suit ourselves, and we don’t look back. The way it’s supposed to happen. What does that even mean? We are born, we live our lives, and then we die. That is what life means, but what happens next? Our bodies are part of nature. After we die, they decay and become nourishment for new life. The way it’s supposed to happen. We have completely ripped that out of context. But what does a real and honest connection to nature and death mean?